November 3, 2023

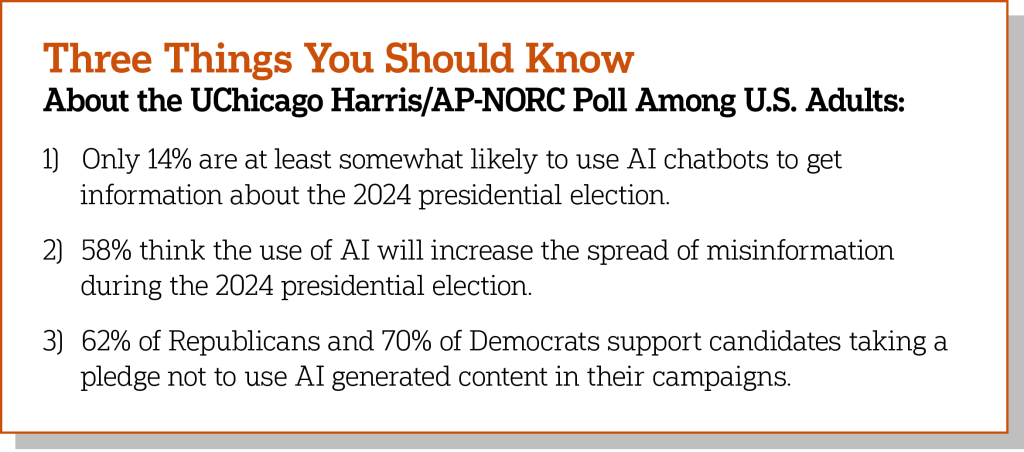

Only 14% of adults are even somewhat likely to use artificial intelligence (AI) to get information about the upcoming presidential election, and a majority are concerned about AI increasing the spread of election misinformation, according to a new UChicago Harris/AP-NORC Poll.

AI, which is designed to perform tasks that humans can do, such as recognizing speech or pictures, is a relatively unfamiliar technology for most people. Fifty-four percent of adults have not read or heard much at all about AI and just 30% report they have used an AI chatbot or image generator. Younger adults are more familiar with AI, but they are just as skeptical as older adults about its use in the 2024 election.

There is a bipartisan consensus that the use of AI by either voters or candidates would be more of a bad thing than a good thing, although the public is split on using AI chatbots to find information about how to register to vote. Fewer than 1 in 10 report it would be a good thing for voters to use chatbots to decide who to vote for (8%), for candidates to tailor political advertisements to individual voters (7%), or for candidates to use AI to edit or touch up photos and videos (6%).

Adults are more likely to say it is a good thing for voters to use AI chatbots to find information about how to register to vote (37%) or find information on how to cast a ballot (26%), although studies show these are the types of information for which AI is less likely to provide reliable answers.

Overall, 58% of adults are concerned about the use of AI increasing the spread of false information during the 2024 presidential election, and those who are more familiar with AI tools are more likely to say its use will increase the spread of misinformation (66% vs. 51%).

Although younger adults have heard more about AI and are more likely to have used it than older adults, younger adults are no more likely than older adults to say they will use AI chatbots to get information about the election.

Most adults, regardless of age or partisanship, believe that technology companies (83%), social media companies (82%), the news media (80%), and the federal government (80%) share at least some responsibility when it comes to preventing the spread of misinformation by AI.

There is also bipartisan support for a variety of actions to address the use of AI. Majorities favor the federal government banning AI-generated content that contains false or misleading images in political ads (66%), technology companies labeling all AI-generated content made on their platforms (65%), and politicians and the groups that support them making a pledge not to use AI-generated content in their campaigns (62%).

The nationwide poll was conducted by the University of Chicago Harris School of Public Policy and The Associated Press-NORC Center for Public Affairs Research from October 19 to October 23, 2023, using AmeriSpeak®, the probability-based panel of NORC at the University of Chicago. Online and telephone interviews using landlines and cell phones were conducted with 1,017 adults. The margin of sampling error is +/-4.1 percentage points.

Suggested Citation: AP-NORC Center for Public Affairs Research. (November 2023). “There Is Bipartisan Concern About the Use of AI in the 2024 Elections.” https://apnorc.org/projects/there-is-bipartisan-concern-about-the-use-of-ai-in-the-2024-elections